The McCulloch-Pitts (MP) neuron applies to Boolean inputs with equal weights and to linearly separable functions. What about instances where the inputs are not Boolean (real numbers, for example) or some inputs are more important than others? Or what if we're dealing with functions that are not linearly separable?

Perceptrons, conceived by the psychologist Frank Rosenblatt and later refined by Minsky and Papert, are a more broadly applicable version of the MP neuron.

Perceptrons allow weights to be assigned to the inputs and provide a mechanism to learn these weights. Inputs are also not limited to Boolean values.

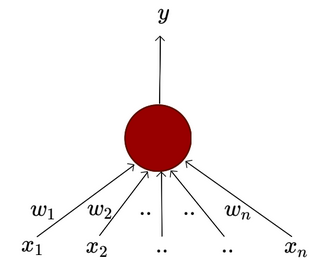

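Including the weights for the different inputs, we get the following mathematical picture, where $x_1, \dots, x_n$ are the inputs, $w_1, \dots, w_n$ their weights and $\theta$ the threshold:

$$
y = \begin{cases} 1 & \text{if } \sum_{i=1}^{n} w_i x_i \geq \theta \\ 0 & \text{if } \sum_{i=1}^{n} w_i x_i < \theta \end{cases}
$$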

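The conventional way of expressing the above inequalities is to move the threshold $\theta$ to the left-hand side by introducing a dummy input $x_0 = 1$ with weight $w_0 = -\theta$. Rewriting the inequalities in this fashion, we get:

$$
y = \begin{cases} 1 & \text{if } \sum_{i=0}^{n} w_i x_i \geq 0 \\ 0 & \text{if } \sum_{i=0}^{n} w_i x_i < 0 \end{cases}
$$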

The weight $w_0 = -\theta$ is called the bias, since it represents a prior inclination or prejudice. It acts as a threshold that the weighted sum of the inputs must overcome before the neuron fires. For example, if I am contemplating whether to watch a movie tonight, my threshold would be rather low if I'm a movie buff, so little convincing would be needed. If not, it would take a lot of convincing, denoting a high threshold.
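To make the two formulations concrete, here is a minimal Python sketch of the decision rule written both ways: once with an explicit threshold $\theta$, and once with the dummy input $x_0 = 1$ and bias $w_0 = -\theta$. The function names, the three inputs and their weights are hypothetical values chosen only for illustration; they do not come from the text.

```python
from typing import Sequence


def fires_threshold(x: Sequence[float], w: Sequence[float], theta: float) -> int:
    """Threshold form: output 1 if sum_{i=1}^{n} w_i * x_i >= theta, else 0."""
    total = sum(wi * xi for wi, xi in zip(w, x))
    return 1 if total >= theta else 0


def fires_bias(x: Sequence[float], w: Sequence[float], theta: float) -> int:
    """Bias form: prepend dummy input x_0 = 1 with weight w_0 = -theta,
    then output 1 if sum_{i=0}^{n} w_i * x_i >= 0, else 0."""
    x_aug = [1.0, *x]     # x_0 = 1
    w_aug = [-theta, *w]  # w_0 = -theta, the bias
    total = sum(wi * xi for wi, xi in zip(w_aug, x_aug))
    return 1 if total >= 0 else 0


# Hypothetical real-valued inputs and weights for the movie decision.
weights = [0.6, 0.3, 0.1]
inputs = [0.9, 0.2, 0.5]

# A low threshold (movie buff) versus a high threshold (hard to convince).
for theta in (0.3, 0.9):
    print(theta, fires_threshold(inputs, weights, theta), fires_bias(inputs, weights, theta))
```

The two functions are algebraically identical. With floating-point arithmetic they can only disagree when the weighted sum lands essentially on the threshold, because the additions happen in a different order.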

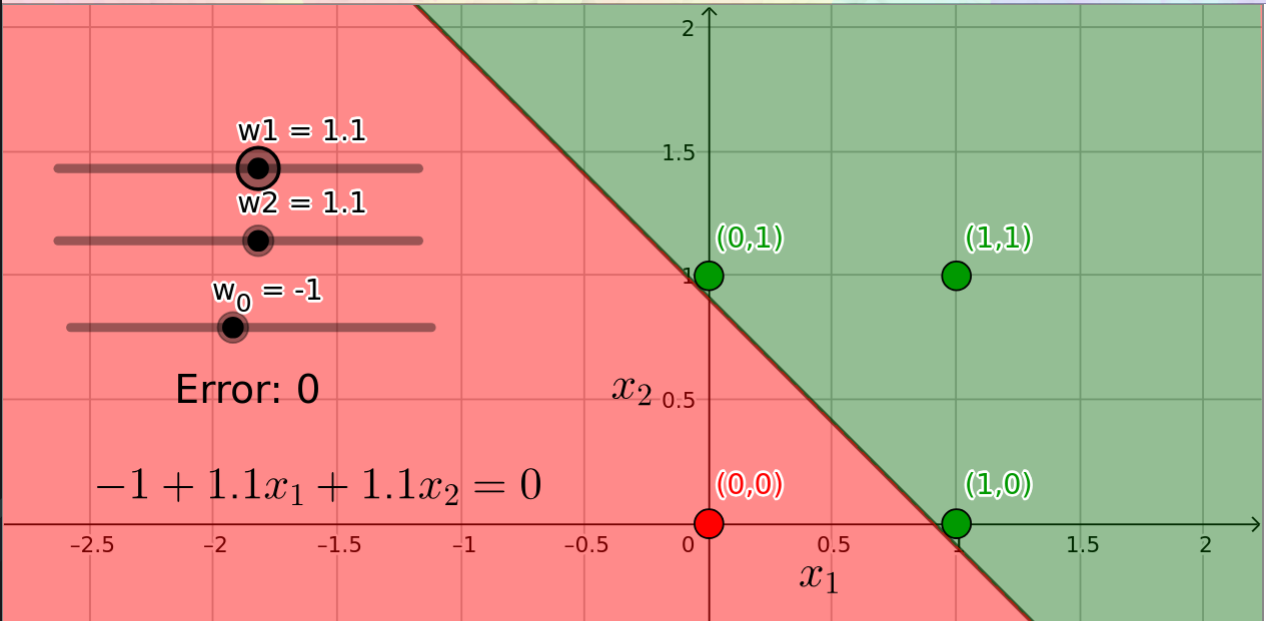

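Like MP neurons, perceptrons separate the input space into two halves with a line (a hyperplane in higher dimensions). Therefore, they too can only represent linearly separable functions. If we analyze the OR function with 2 inputs, there are four possible input pairings: $(0,0)$, $(0,1)$, $(1,0)$ and $(1,1)$. Three of these return true. If $w_1$ and $w_2$ are the weights assigned to the two features and $w_0$ is the bias (with dummy input $x_0 = 1$), we get the following set of inequalities:

$$
\begin{aligned}
w_0 + w_1 \cdot 0 + w_2 \cdot 0 &< 0 &&\implies w_0 < 0 \\
w_0 + w_1 \cdot 0 + w_2 \cdot 1 &\geq 0 &&\implies w_2 \geq -w_0 \\
w_0 + w_1 \cdot 1 + w_2 \cdot 0 &\geq 0 &&\implies w_1 \geq -w_0 \\
w_0 + w_1 \cdot 1 + w_2 \cdot 1 &\geq 0 &&\implies w_1 + w_2 \geq -w_0
\end{aligned}
$$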

Any valid set of weights must satisfy all of these constraints; each such set makes the perceptron compute the OR function. Among others, one possible solution to this set is $w_0 = -1$, $w_1 = 1.1$, $w_2 = 1.1$.
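As a sanity check, the sketch below encodes the OR truth table, tests whether a given weight triple $(w_0, w_1, w_2)$ reproduces it (which is the same as satisfying all four inequalities), and then scans a small grid of weights to show that many triples work. The candidate $w_0 = -1$, $w_1 = w_2 = 1.1$, the failing triple, the grid from $-2$ to $2$ in steps of $0.5$ and all function names are illustrative assumptions, not part of the original text.

```python
from itertools import product

# The four input pairings (x1, x2) and the OR output for each.
OR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}


def perceptron(x1: float, x2: float, w0: float, w1: float, w2: float) -> int:
    """Bias form with dummy input x0 = 1: fire if w0 + w1*x1 + w2*x2 >= 0."""
    return 1 if w0 + w1 * x1 + w2 * x2 >= 0 else 0


def implements_or(w0: float, w1: float, w2: float) -> bool:
    """True if the weights satisfy all four OR inequalities,
    i.e. the perceptron reproduces the whole OR truth table."""
    return all(
        perceptron(x1, x2, w0, w1, w2) == y for (x1, x2), y in OR_TABLE.items()
    )


# Illustrative candidate: w0 = -1, w1 = w2 = 1.1.
print(implements_or(-1.0, 1.1, 1.1))

# Violates w1 >= -w0, so input (1, 0) wrongly outputs 0.
print(implements_or(-1.0, 0.5, 1.1))

# Scan a coarse grid (-2.0, -1.5, ..., 2.0) for every weight triple that works.
grid = [v / 2 for v in range(-4, 5)]
solutions = [w for w in product(grid, repeat=3) if implements_or(*w)]
print(len(solutions), solutions[:3])
```

Every triple found by the scan has a negative $w_0$ and both $w_1$ and $w_2$ at least $-w_0$, which is exactly the region carved out by the inequalities above.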