If we have two sets and such that the former contains all the positive points in the input space and the latter contains all the negative points, how can we prove that the PLA will converge for a linearly separable dataset? In other words, how can we address the concern from the previous section, that the updating of the weight vector could go on forever, without cleanly separating the positive points from the negative points at the end? For the PLA to be valid the number of times the weights are updated needs to be finite.

Let’s consider a new set . In the RHS, is the negation of , meaning that we flip the signs of all those points for which . Every will satisfy . Further we normalise all the p’s such that . will the solution weight vector for this normalised set.

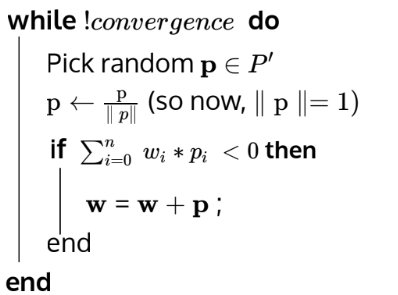

With that established, the following screenshot has the pseudocode for this slightly modified PLA:

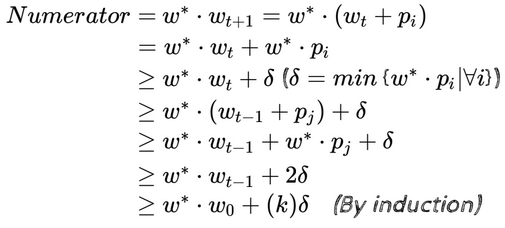

Notice that we have only one if loop this time since all points in are positive. is the optimal solution, but we don’t know what it is yet. It gets updated each time . It need not be updated after every iteration. Hence, after iterations, the total number of updates will be less than . Now, let’s say at the iteration, we see a misclassification for point . We correct that giving us . If is the angle between and :

Upon performing some computations on the numerator and denominator of the above, we get:

Combining the 2 results, we get:

This means that grows proportionally to . As increases, could become arbitrarily large. However, has to be , which means that will be bounded too. This proves that the number of updates made to the weight vector will be a finite and the PLA will converge at some point.