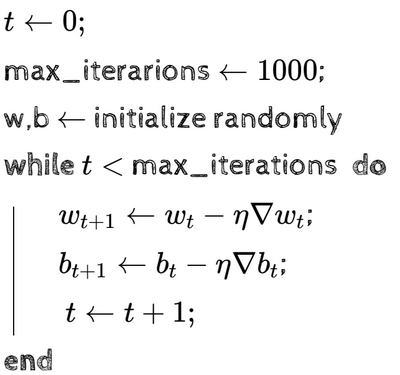

How can we go about learning the parameters of a feedforward neural network? Remember that the gradient descent algorithm for a simple neural network was as follows:

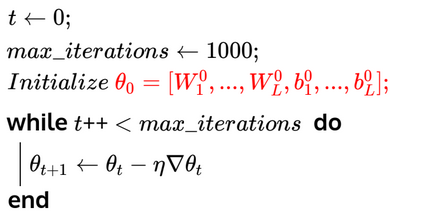

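Now, instead of a single weight $w$ and bias $b$, we have a weight matrix $W_i$ and a bias vector $b_i$ for each layer $i = 1, \dots, L$. We can put all of these into one vector called $\theta$, modifying the algorithm to:

$$\theta_{t+1} = \theta_t - \eta \nabla \theta_t$$

Where $\eta$ is the learning rate and:

$$\nabla \theta_t = \left[ \frac{\partial \mathcal{L}(\theta)}{\partial W_{1,t}}, \dots, \frac{\partial \mathcal{L}(\theta)}{\partial W_{L,t}}, \frac{\partial \mathcal{L}(\theta)}{\partial b_{1,t}}, \dots, \frac{\partial \mathcal{L}(\theta)}{\partial b_{L,t}} \right]^T$$

$\nabla \theta_t$ is composed of the gradients of the loss $\mathcal{L}$ with respect to the weight and bias of each layer in the network. So, how can we calculate the loss function and how can we calculate the gradient?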
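To make the update rule concrete, here is a minimal sketch of gradient descent over a single flat parameter vector (named `theta` here) that holds the weights and biases of every layer. Everything specific in it is an assumption for illustration: the 2-2-1 layer sizes, the sigmoid activations, the squared-error loss, the toy OR dataset, the learning rate `eta`, and the initial values. The gradient is approximated with central finite differences purely as a stand-in, since how to compute it properly is the question raised above.

```python
import math

# Hypothetical layer sizes: 2 inputs -> 2 hidden units -> 1 output.
LAYER_SIZES = [2, 2, 1]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def unpack(theta):
    """Split the flat vector theta into per-layer (W, b) pairs."""
    layers, i = [], 0
    for n_in, n_out in zip(LAYER_SIZES[:-1], LAYER_SIZES[1:]):
        # Each row of W holds the incoming weights of one unit.
        W = [theta[i + r * n_in: i + (r + 1) * n_in] for r in range(n_out)]
        i += n_in * n_out
        b = theta[i: i + n_out]
        i += n_out
        layers.append((W, b))
    return layers


def forward(theta, x):
    """Forward pass: every layer applies sigmoid(W h + b)."""
    h = x
    for W, b in unpack(theta):
        h = [sigmoid(sum(w * v for w, v in zip(row, h)) + bias)
             for row, bias in zip(W, b)]
    return h


def loss(theta, data):
    """Mean squared error over the dataset (an assumed loss)."""
    return sum((forward(theta, x)[0] - y) ** 2 for x, y in data) / len(data)


def numerical_gradient(theta, data, eps=1e-6):
    """Approximate each partial derivative with central differences.

    This is a stand-in for an exact gradient computation.
    """
    grad = []
    for i in range(len(theta)):
        plus = theta[:i] + [theta[i] + eps] + theta[i + 1:]
        minus = theta[:i] + [theta[i] - eps] + theta[i + 1:]
        grad.append((loss(plus, data) - loss(minus, data)) / (2 * eps))
    return grad


def gradient_descent(theta, data, eta=0.5, max_iterations=200):
    """Repeat the update theta <- theta - eta * grad(theta)."""
    for _ in range(max_iterations):
        grad = numerical_gradient(theta, data)
        theta = [p - eta * g for p, g in zip(theta, grad)]
    return theta


# Toy dataset (logical OR) and arbitrary initial parameters:
# 4 weights + 2 biases for layer 1, then 2 weights + 1 bias for layer 2.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
theta0 = [0.1, -0.2, 0.3, 0.4, 0.0, 0.0, 0.5, -0.5, 0.0]

theta = gradient_descent(theta0, data)
print("loss before:", loss(theta0, data))
print("loss after: ", loss(theta, data))
```

Note that the update loop never looks at individual layers. It only sees one vector and one gradient of the same length, which is the point of collecting all parameters into a single vector. Finite differences need two loss evaluations per parameter, so they are only practical for a network this small.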