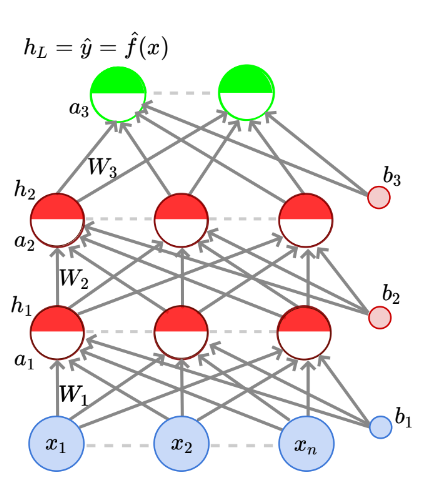

Having computed the gradient of the loss function with respect to the output layer, we now move on to the hidden layers. Since there are several of them, we’d like to derive a single formula that works for every hidden layer.

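Let’s say we’d like to compute the gradient of the loss function $\mathcal{L}(\theta)$ with respect to one neuron $h_{ij}$, the $j$-th neuron in the $i$-th layer of the network, where layer $i$ is neither the input nor the output layer.

What we’d like to compute is:

$$\frac{\partial \mathcal{L}(\theta)}{\partial h_{ij}}$$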

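Generally, the derivative of a function $p(z)$, which can be written as a function of some intermediate functions $q_1(z), \dots, q_M(z)$, is:

$$\frac{\partial p(z)}{\partial z} = \sum_{m=1}^{M} \frac{\partial p(z)}{\partial q_m(z)} \cdot \frac{\partial q_m(z)}{\partial z}$$

- Where $q_m(z)$ represents each computation of the intermediate function.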
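As a quick sanity check of this chain rule, here is a minimal sketch that compares the summed form against a finite-difference estimate. The functions `q1`, `q2` and `p` are made-up examples chosen purely for illustration; they are not part of the network being discussed.

```python
import math

# Hypothetical intermediate functions: q1(z) = z^2 and q2(z) = sin(z).
def q1(z):
    return z ** 2

def q2(z):
    return math.sin(z)

# Hypothetical outer function, written in terms of the intermediates:
# p = q1 * q2.
def p(z):
    return q1(z) * q2(z)

def chain_rule_derivative(z):
    # Sum over the intermediate functions of (dp/dq_m) * (dq_m/dz).
    dp_dq1, dq1_dz = q2(z), 2 * z
    dp_dq2, dq2_dz = q1(z), math.cos(z)
    return dp_dq1 * dq1_dz + dp_dq2 * dq2_dz

def numerical_derivative(f, z, eps=1e-6):
    # Central finite difference, used only as an independent check.
    return (f(z + eps) - f(z - eps)) / (2 * eps)

z = 0.7
analytic = chain_rule_derivative(z)
numeric = numerical_derivative(p, z)
print(abs(analytic - numeric) < 1e-6)
```

Each term of the sum is one path by which `z` can influence `p`, which is the same role each neuron of the next layer plays in a network.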

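In our case, the hidden layers determine the output of the layers above them, which in turn determine the loss function. So, in the chain rule above, the hidden-layer neuron $h_{ij}$ plays the role of the variable $z$, the pre-activations $a_{i+1,m}$ of the layer above it play the role of the intermediate functions $q_m(z)$, and the loss function $\mathcal{L}(\theta)$ plays the role of $p(z)$. Applying the above logic to this context:

$$\frac{\partial \mathcal{L}(\theta)}{\partial h_{ij}} = \sum_{m=1}^{k} \frac{\partial \mathcal{L}(\theta)}{\partial a_{i+1,m}} \cdot \frac{\partial a_{i+1,m}}{\partial h_{ij}} = \sum_{m=1}^{k} \frac{\partial \mathcal{L}(\theta)}{\partial a_{i+1,m}} \, W_{i+1,m,j}$$

The second equality assumes the usual forward pass $a_{i+1} = W_{i+1} h_i + b_{i+1}$, in which the only term of $a_{i+1,m}$ that depends on $h_{ij}$ is $W_{i+1,m,j} \, h_{ij}$.

- $i$ refers to the layer being looked at, $m$ refers to each of the $k$ neurons in layer $i+1$ and $j$ is the neuron in layer $i$ whose gradient we’re trying to compute.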

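Now consider two vectors:

$$\nabla_{a_{i+1}} \mathcal{L}(\theta) = \begin{bmatrix} \dfrac{\partial \mathcal{L}(\theta)}{\partial a_{i+1,1}} \\ \vdots \\ \dfrac{\partial \mathcal{L}(\theta)}{\partial a_{i+1,k}} \end{bmatrix}, \qquad W_{i+1,*,j} = \begin{bmatrix} W_{i+1,1,j} \\ \vdots \\ W_{i+1,k,j} \end{bmatrix}$$

- The * means that we take all rows in the $j$-th column of $W_{i+1}$.

Taking the dot product of the two vectors, we get:

$$(W_{i+1,*,j})^T \, \nabla_{a_{i+1}} \mathcal{L}(\theta) = \sum_{m=1}^{k} \frac{\partial \mathcal{L}(\theta)}{\partial a_{i+1,m}} \, W_{i+1,m,j}$$

This sum is exactly the derivative of the loss function with respect to one neuron in the hidden layer. Hence:

$$\frac{\partial \mathcal{L}(\theta)}{\partial h_{ij}} = (W_{i+1,*,j})^T \, \nabla_{a_{i+1}} \mathcal{L}(\theta)$$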

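We can apply this to the entire layer the neuron is in, stacking one such dot product per neuron (with $n$ neurons in layer $i$):

$$\nabla_{h_i} \mathcal{L}(\theta) = \begin{bmatrix} (W_{i+1,*,1})^T \, \nabla_{a_{i+1}} \mathcal{L}(\theta) \\ \vdots \\ (W_{i+1,*,n})^T \, \nabla_{a_{i+1}} \mathcal{L}(\theta) \end{bmatrix} = (W_{i+1})^T \, \nabla_{a_{i+1}} \mathcal{L}(\theta), \qquad i < L$$

- Where $L$ is the number of layers in the network.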
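To make the step from one neuron to the whole layer concrete, here is a small sketch in plain Python. It computes the gradient for a single hidden neuron as a dot product with one column of the next layer's weight matrix, then does the same for every column at once, which amounts to multiplying by the transposed matrix. The layer sizes, the weights and the incoming gradient are made-up numbers, and the function names are hypothetical.

```python
# Hypothetical sizes: layer i has 3 neurons, layer i+1 has 2 neurons.
# W[m][j] is the weight from neuron j of layer i to neuron m of layer i+1.
W = [[0.1, -0.2, 0.3],
     [0.4, 0.5, -0.6]]

# Made-up gradient of the loss w.r.t. the pre-activations of layer i+1.
grad_a_next = [1.0, -2.0]

def grad_single_neuron(W, grad_a_next, j):
    # Dot product of column j of W with the incoming gradient.
    column_j = [row[j] for row in W]
    return sum(w * g for w, g in zip(column_j, grad_a_next))

def grad_hidden_layer(W, grad_a_next):
    # zip(*W) iterates over the columns of W, i.e. the rows of W transposed,
    # so this is the matrix-vector product (W transposed) times grad_a_next.
    return [sum(w * g for w, g in zip(column, grad_a_next))
            for column in zip(*W)]

grad_h = grad_hidden_layer(W, grad_a_next)
per_neuron = [grad_single_neuron(W, grad_a_next, j) for j in range(len(W[0]))]
print(grad_h)
print(per_neuron)
```

Both lists hold the same values. With NumPy arrays, the whole-layer product is usually written `W.T @ grad_a_next`.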

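The problem here is that except for the output layer $L$, we don’t know how to calculate $\nabla_{a_{i+1}} \mathcal{L}(\theta)$. We need to be able to compute the gradient with respect to the pre-activations of the next hidden layer (i.e., $\nabla_{a_{i+1}} \mathcal{L}(\theta)$ for $i + 1 < L$) in order to compute the same for this one. As always, let’s start off by computing the gradient for a single neuron, written for a generic hidden layer $i$ with $h_{ij} = g(a_{ij})$, where $g$ is the activation function:

$$\frac{\partial \mathcal{L}(\theta)}{\partial a_{ij}} = \frac{\partial \mathcal{L}(\theta)}{\partial h_{ij}} \cdot \frac{\partial h_{ij}}{\partial a_{ij}} = \frac{\partial \mathcal{L}(\theta)}{\partial h_{ij}} \, g'(a_{ij})$$

Applying this to the entire vector, we have:

$$\nabla_{a_i} \mathcal{L}(\theta) = \begin{bmatrix} \dfrac{\partial \mathcal{L}(\theta)}{\partial h_{i1}} \, g'(a_{i1}) \\ \vdots \\ \dfrac{\partial \mathcal{L}(\theta)}{\partial h_{in}} \, g'(a_{in}) \end{bmatrix}$$

This is an element-wise multiplication of the two vectors $\nabla_{h_i} \mathcal{L}(\theta)$ and $g'(a_i) = [g'(a_{i1}), \dots, g'(a_{in})]^T$. Therefore:

$$\nabla_{a_i} \mathcal{L}(\theta) = \nabla_{h_i} \mathcal{L}(\theta) \odot g'(a_i)$$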
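The element-wise step can be sketched in a few lines. This example assumes a sigmoid activation, which is an assumption made for illustration only (the derivation holds for any differentiable activation), and the input vectors are made-up numbers.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_prime(x):
    # Derivative of the sigmoid: s(x) * (1 - s(x)).
    s = sigmoid(x)
    return s * (1.0 - s)

def grad_preactivation(grad_h, a):
    # Element-wise product of the gradient w.r.t. the activations
    # with the activation derivative evaluated at each pre-activation.
    return [gh * sigmoid_prime(aj) for gh, aj in zip(grad_h, a)]

a = [0.0, 2.0, -1.0]        # made-up pre-activations of one hidden layer
grad_h = [0.5, -1.0, 2.0]   # made-up gradient w.r.t. that layer's activations
grad_a = grad_preactivation(grad_h, a)
print(grad_a)
```

Each neuron's pre-activation only affects its own activation, so no sum over neurons is needed here, unlike in the step through the weight matrix.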

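Now that we can calculate the gradient with respect to the layers above a particular hidden layer, we can calculate it for the hidden layer as well. Note that this doesn’t create a circular dependency. To compute the gradient w.r.t. $h_i$ we need $a_{i+1}$’s gradient, but for $a_{i+1}$ we need $h_{i+1}$’s, which belongs to a layer closer to the output. The chain of dependencies therefore ends at the output layer, whose gradient we have already computed.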
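Putting the two steps together, the recursion can be sketched as a loop that starts at the output layer and walks backwards. This is a minimal sketch under several assumptions that are not taken from the article: sigmoid hidden activations, a linear output layer with squared-error loss (so the output-layer gradient is simply output minus target), no bias terms, and made-up weights. All function names are hypothetical. The result is checked against finite differences on the input, which acts as layer 0.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def matvec_transposed(W, v):
    return [sum(w * x for w, x in zip(col, v)) for col in zip(*W)]

def forward(weights, x):
    # hs[0] is the input; hs[i] is the activation of hidden layer i.
    hs = [x]
    for W in weights[:-1]:
        hs.append([sigmoid(v) for v in matvec(W, hs[-1])])
    a_out = matvec(weights[-1], hs[-1])  # linear output layer (assumption)
    return hs, a_out

def loss(weights, x, y):
    _, a_out = forward(weights, x)
    return 0.5 * sum((o - t) ** 2 for o, t in zip(a_out, y))

def backward(weights, x, y):
    hs, a_out = forward(weights, x)
    # Output-layer gradient for the assumed squared-error loss.
    grad_a = [o - t for o, t in zip(a_out, y)]
    grads_h = {}
    # weights[i] maps layer i's activations to layer i+1's pre-activations.
    for i in range(len(weights) - 1, -1, -1):
        grad_h = matvec_transposed(weights[i], grad_a)
        grads_h[i] = grad_h
        if i > 0:
            # Element-wise step; for a sigmoid, g'(a) = h * (1 - h).
            grad_a = [gh * h * (1.0 - h) for gh, h in zip(grad_h, hs[i])]
    return grads_h

weights = [
    [[0.2, -0.4], [0.7, 0.1], [-0.3, 0.5]],  # 2 inputs -> 3 hidden
    [[0.6, -0.1, 0.3], [-0.5, 0.8, 0.2]],    # 3 hidden -> 2 hidden
    [[0.9, -0.7]],                           # 2 hidden -> 1 output
]
x, y = [1.0, -2.0], [0.5]
grads_h = backward(weights, x, y)

# Finite-difference check of the gradient w.r.t. the input (layer 0).
eps = 1e-6
numeric = []
for j in range(len(x)):
    x_plus, x_minus = list(x), list(x)
    x_plus[j] += eps
    x_minus[j] -= eps
    numeric.append((loss(weights, x_plus, y) - loss(weights, x_minus, y)) / (2 * eps))

max_error = max(abs(g - n) for g, n in zip(grads_h[0], numeric))
print(max_error < 1e-6)
```

Each pass through the loop uses only the gradient produced by the previous pass, one layer closer to the output, which is why the dependency is a chain rather than a cycle.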